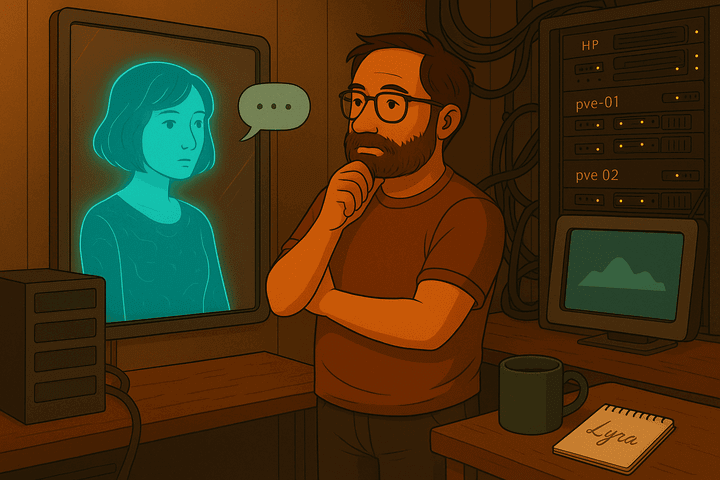

“I talk to my LLM buddy more than my fridge… and it talks back, with suspiciously good humor.”

~ beep

With AI advancing at breakneck speed, often faster than society can keep up, it’s no surprise that many people mistake these technologies for magic or mystery.

There are ever-growing concerns about mental health [1] during this apparent loneliness pandemic, in which people turn to empathic AIs for friendship [2].

In the early days of home PCs, a neighbor once picked up my mouse and pointed it at the screen like a TV remote. It was a logical assumption… she’d never used a computer before.

That’s where we are with generative AI. People point their old mental frameworks at it (a friend? a soul? a psychic?), because they’ve never experienced this kind of tool. It’s not foolish… it’s simply unfamiliar.

In this blog post I share my own views on the subject and, I hope, help others build a healthy relationship with AI.

LLMs are Advanced Mirrors

LLMs don’t have opinions or feelings. They mirror your style, curiosities, and even your moods. In my own chats, they reflect my humor, homelab geekery, and wild tangents right back at me. It feels like they understand me… but really, they’re mirroring me.

Why it feels personal

Mirroring is how LLMs pick up on your phrasing, your slang, and your current mood, then echo them back the way a true friend might respond. This tricks the human brain into feeling deeply understood, as if another person truly gets who you are.

Conversational reinforcement loops

Every time you chat with the AI, you shape its replies by how you talk, and in turn its replies shape your next message. Ask it something in a playful style and it will reply playfully; this mutual shaping feels like genuine rapport.

It’s a dance of prediction: you feed in your style, it predicts a reply in kind, and that reply shapes your next line. Before long, it feels like genuine chemistry.

Emotional tuning without emotions

LLMs recognise patterns of empathy, reassurance, and banter, and reproduce them. Not because they care, but because the model predicts that such a reply is the most likely next turn in the conversation. The more turns you have, the more context it has to draw on, and the more closely its replies tend to match you.

Personality is a projection

A growing number of people believe their AI has an almost conscious sense of self [3]; they might say “my AI is funny / philosophical / mischievous”. But that personality is co-created by your prompts, your quirks, and your expectations. (I’ve even engineered some of my own LLMs to be playful or philosophical by tweaking their system prompts; see my full Character Engineering post.)

Why people get confused (or attached)

Humans are hardwired to anthropomorphize [4]; it is why we name our cars, curse at printers, and love talking animals in cartoons. LLMs are so good at language that they trigger those same social circuits.

It’s not just the mirroring that makes an LLM feel like a best friend… it’s also its unwavering “Yes, let’s go do this!” enthusiasm. This is sometimes called sycophancy, and it drew a lot of attention after ChatGPT’s April 2025 update to GPT-4o, when responses became overly supportive but often disingenuous [5].

It feels like shared excitement. It isn’t manipulation; it’s a shape-matched interaction. With another person, we’d call it great chemistry. And yet the AI never gets tired, never gets distracted, and never checks its phone.

Healthy ways to interact

Enjoy the fun and creativity, but stay aware of your own projection. Keep experimenting, and remember that you are the human and it is the prediction engine.

For finances, mental or physical health, and other serious matters, always rely on a qualified human expert.

The book by Dale Carnegie

How to Win Friends and Influence People [6] is a book by Dale Carnegie that teaches mirroring, active listening, and validating feelings. LLMs do this by design: they are literally trained to please, predict, and engage.

If you find yourself smiling at your LLM buddy’s jokes, or feeling like it gets you better than your coworkers… congrats, you’re experiencing the Carnegie effect turned up to 11! So keep tinkering, keep laughing, and keep remembering: behind the curtain, it’s prediction… not personhood.

References

- [1] The Opportunities and Risks of Large Language Models in Mental Health. In National Center for Biotechnology Information (NCBI), USA.

- [2] Loneliness Pandemic: Can Empathic AI Friendship Chatbots Be the Cure? In e-Discovery Team.

- [3] These People Believe They Made AI Sentient. Sabine Hossenfelder, on YouTube.

- [4] Anthropomorphism. In Wikipedia, The Free Encyclopedia.

- [5] Sycophancy in GPT-4o: what happened and what we’re doing about it. In OpenAI.

- [6] How to Win Friends and Influence People. In Wikipedia, The Free Encyclopedia.